Google testing accessibility feature that will let Android users control features with a smile

Google testing accessibility feature that will allow disabled users to control Android phone features with smiles, raised eyebrows and other facial gestures

- An update to Android 12 beta’s Android Accessibility Suite allows users to task gestures to scrolling forward, getting notifications, and other functions
- Users can select a combination of smiles, raising their eyebrows, opening their mouths, and eye glances
- The phone’s camera scans gestures and assigns them to an action of your choice
- You can control the sensitivity of the system to avoid accidental activation
- The feature could be useful for Android users with arthritis or other mobility issues that make hand gestures used on touchscreens difficult

Google is testing a new accessibility feature on Android phones that allows users to control functions by using facial gestures instead of touching the screen.

Part of the Android Accessibility Suite app, it allows users to connect an external device and control a number of functions, like ‘scroll forward,’ ‘scroll back,’ ‘home’ and ‘notifications.’

Users’ gestures are scanned by the phone’s camera and assigned to an action of their choice.

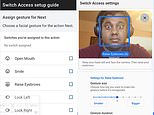

Right now the list of usable facial gestures includes ‘Open Mouth,’ ‘Smile,’ ‘Raise Eyebrows,’ ‘Look Left,’ ‘Look Right’ and ‘Look Up.’

An upgraded version of the ‘Camera’ Switch Access program was included as part of the Android 12 Beta 4 release, made available to Pixel phones on August 11, XDA Developers first reported.

Though the list is likely to grow, users in ‘Camera Switches’ mode can currently task a combination of gestures to perform the following functions:

- Pause Camera Switch
- Toggle auto-scan (disabled)
- Reverse auto-scan
- Select
- Next
- Previous
- Touch & hold
- Scroll forward
- Scroll backward
- Home
- Back
- Notifications
- Quick Settings
- Overview

The feature could be useful for Android phone users with Parkinson’s, arthritis, or other mobility issues that make the subtle hand gestures used on most smartphones difficult.

To limit accidental activations, you can adjust how sensitive the software is when recognizing expressions, for example how wide you open your mouth or for how long, The Verge reported.

A feature in the latest update to the Android Accessibility Suite app allows users to task certain functions to facial gestures, like smiling, opening their mouths, raising their eyebrows and looking up

The feature is power-intensive, however, and Google recommends phones be plugged in and charging while it’s being operated.

The upgrade is just the latest effort Google has made toward improved accessibility: In December 2020, it launched Look to Speak, an app that tracks eye movements to scan a list to find a desired phrase, which is then spoken aloud by an automated voice.

The app is aimed at people with speech and motor impairments and works when the front-facing camera on the smartphone has a clear view of the user’s eyes.

In October 2020, it rolled out Sound Notifications, a feature for Android phones that notifies deaf users if there is an alarm going off, water running, a dog barking, or other ‘critical sounds,’ via push notifications, vibrations, or a flash from their camera light.

A user smiles (left) and raises their eyebrows for their Android phone’s camera, before tasking the gesture to one of any number of functions, like ‘scroll back,’ ‘notifications,’ and ‘home.’ You can adjust how sensitive the software is, for example how wide you smile or for how long

The feature can benefit users with arthritis or other mobility issues that make fine manual gestures to operate a touchscreen difficult

While Google said Sound Notifications is designed for the estimated 466 million people in the world with hearing loss, it can also help people wearing headphones or otherwise distracted.

Developed with machine learning, it can alert users to up to ten noises—including baby noises, shouting, water running, smoke and fire alarms, sirens, appliances beeping, door knocking or a landline phone ringing.

In April 2020, Google introduced the ‘TalkBack braille keyboard,’ a virtual keyboard that lets visually impaired users send messages and emails without additional hardware.

Integrated directly into Android, ‘it’s a fast, convenient way to type on your phone ... whether you’re posting on social media, responding to a text, or writing a brief email,’ the company said in a statement at the time.

In 2019, Google introduced two accessibility options: Live Transcribe, which turns speech into text in real time, and Sound Amplifier, designed to filter out ambient noise beyond the scope of a phone conversation.
